From Comms to Strategy: Where AI Will Have the Biggest Impact In ’24

Communications departments will need to be restructured to prioritize rapid response as AI-generated misinformation is unleashed on voters next year.

Campaigns previously handled misinformation and disinformation with an approach akin to “I’ll be really loud with the facts,” according to Catherine Algeri, an SVP at Democratic digital firm Do Big Things.

Algeri, who was previously a senior digital advisor to Kamala Harris’ 2020 presidential campaign, said that going into 2024 “forward thinking campaigns” are going to prioritize their “AI misinformation response time.”

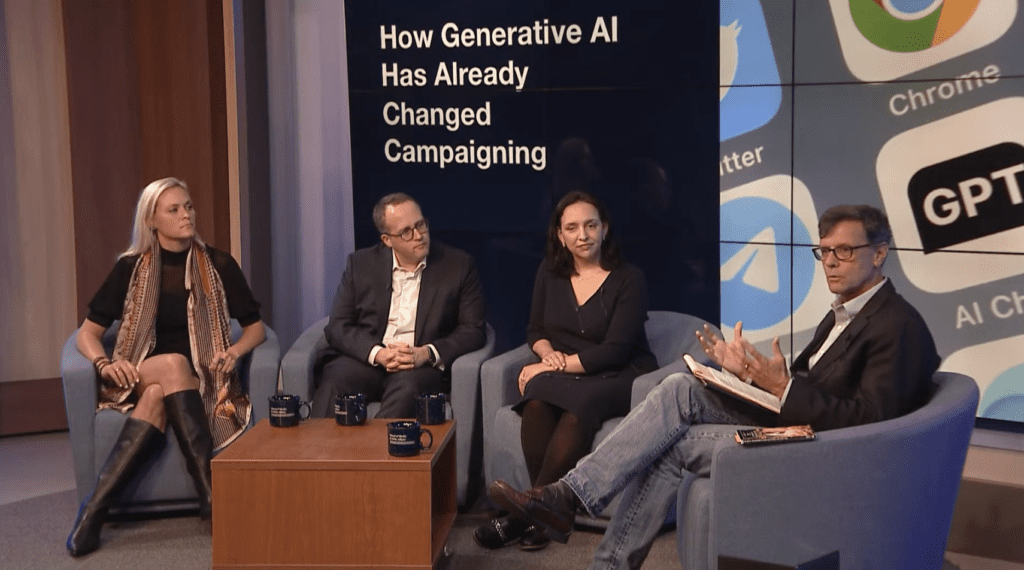

That’s because the previous “loud” truth approach doesn’t work, she said Oct. 19 at a panel discussion hosted by C&E and George Washington University’s School of Media and Public Affairs.

“That doesn’t help you. What you actually need is a stronger counter narrative,” she said. “What we’re going to see is that campaigns are going to have to spend more time specifically on misinformation units.”

Part of the reason why campaigns will see an explosion of content they’ll have to respond to next year is “the power of transcripts,” according to Joe Pounder, CEO of Bullpen Strategy Group.

He noted that AI tools can now transcribe public events in real time, whereas that work was previously done manually by practitioners who could spend hours transcribing a video after an event concluded.

“Getting a transcript of an event has been a struggle throughout my 20 years of doing politics,” Pounder said. “[Now], we’re doing it in real time and we’re getting really good transcripts.”

He added: “You have to embrace [AI] from the political and public affairs perspective. You have no choice. You have to learn how to utilize it in your day-to-day. And it’s a matter of scale. It provides a lot of quick resources to any operation that’s going on.

“There’s just so much information to process, and this is true on the data side as well,” he continued. “Two hands can’t do it. If you have an AI model in which you’re putting a lot of content into and able to process that information into quick content, it really speeds up how fast you can scale.”

Still, the impact from these tools could be more significant in two to four years. By that time, Pounder said, practitioners dissecting campaigns will ask themselves: “Hey, did that campaign win because it had the better AI model?”

Kristin Davison, VP at GOP mega-firm Axiom Strategies, highlighted a fairly universal caution political practitioners have noted when it comes to AI: “[You can’t] relate and inspire with AI,” she said, noting it’s more of a question of “how can you use AI to get that candidate’s human emotion out to people?”

Algeri echoed Davison, noting that there are some areas where AI tools can’t help campaigns, and one of those is innovation. On that point, Algeri said AI’s more immediate use may be to clear space for campaign staff and consultants to focus on strategic priorities.

“In the grind of the campaigns, there are always these other big strategic priorities that staff can’t focus on,” she said. “But now they can use AI to generate a draft [of a fundraising email], go through it, make that process happen a lot faster and then make time to talk to their colleagues about those bigger strategic initiatives.”